I don’t blog enough to be topical or cover current trends, and I like to keep things light and funny, or positive and optimistic in general. In fact, at the end of 2022 when I actually tried to be topical and write about artificial intelligence, I did it from a pretty altruistic standpoint, with the hope that since a.i. isn’t going anywhere, working with the changing landscape is a better approach than railing against it. At my core, I still believe that, and I’ll put a pin in that for now, but today I’d like to revisit a.i. with a little more experience after having had the wonderful opportunity to visit the Adobe Max Conference in Los Angeles as an attendee.

At the Adobe Max Conference, October 2023 where I got to meet Aaron Draplin!

I say, “a little more experience” because not long after my initial blog post about a.i. in December, I followed that up in April of this year (I told you I don’t blog enough to be topical!) with a post detailing my impressions using ChatGPT and DALL-E, which very quickly became dated after both platforms made serious technological advancements. I think for most people at that time, a.i. was still a fun and funny little moment of pop culture that humorously added to the zeitgeist with things like Keaton Patti’s bananas Olive Garden commercials and Trump Rallies, all supposedly created by feeding hours of those particular brands’ original content into a.i. bot programs. Maybe the undercurrent had a slightly worrisome tone about the inevitability of robot overlords, but it was still relatively light-hearted and quaint. Who could have ever imagined we’d be pining for the simpler times of 2022?

Of course, like most technological milestones, once something starts to get traction, it really takes off. Granted, a.i. has actually existed since the mid 20th century (starting with the Perceptron Mark I in 1957), but it really started to generate public interest in the 80s with the goal of revolutionizing computer processing. It’s directly because of this that artificial intelligence has thrived recently: not so much because tech geniuses have learned more about a.i. themselves, but rather because computer storage, memory, and speed have increased beyond what most average consumers even need. And in 2023, DALL-E released its latest text-to-image model featuring significantly more nuance and detail, and ChatGPT became the fastest growing consumer software application. It now offers its GPT-3.5 engine on a freemium model, while ChatGPT Plus offers its GPT-4 engine to users for $20 USD a month. If that’s not impressive enough, as of this post, ChatGPT itself isn’t even a full year old yet, having launched in November of 2022! In March 2023, Adobe released its Firefly generative a.i. tools in beta, and they arrived in the Photoshop beta soon after. Today, those a.i. tools are fully integrated into Photoshop, and Firefly a.i. is in beta for Adobe Illustrator, creating editable and functional vector illustrations, as well as Adobe Premiere Pro, which also offers beta tools for speech to text editing for videos. They are insanely easy to use and save lots of time.

All of these images on Adobe Stock are generative a.i.

Let’s step back and address just a couple of quick bullet points I made previously regarding a.i.’s learning technique. Now initially I made a mistake and thought the process was called stable diffusion, but that is in fact the name of a latent diffusion model developed by a company called Stability AI. Artificial intelligence uses machine learning to develop a deep knowledge of whatever subject it’s tasked to create. I’m cutting out a lot of context here for the sake of brevity, but imagine a robot who has the capacity to instantly read every single book on a particular subject so that it can then use that knowledge to compose its own creation based on that immediate education. It only has the information it has acquired, so it bases everything it can do on that information alone. I read hundreds of textbooks about various software programs when I was in school, but it almost all felt useless after I actually started working and realized experience was vastly more important. As a result, most of my initial professional work looks ridiculous, much like what you’d get from a robot who only learned from reading and not actually doing.

Now imagine that same robot is instructed to paint a masterpiece, but in order to do that, it has to visually take in every painting currently on display in the Louvre. So it rushes around the museum and sees works from da Vinci, Géricault, Michelangelo, and Jacques-Louis David. Afterwards, you ask the robot to paint its own masterpiece, but upon completion you notice it hasn't really painted anything original, but rather cut and pasted elements like the Mona Lisa's smile or Roman columns from the Oath of the Horatii. It's specifically these issues that have a lot of creative people feeling pretty upset because the robot hasn't actually created anything, it's just stolen components from others. But then you also notice that because the a.i.'s creators have basically told this robot to go out and learn everything from the internet, you start getting into real troublesome areas because if you haven't noticed, there's some pretty horrible stuff online and it's not exactly hidden either. So now on top of being an art thief, the robot has also learned to be racist, sexist, and creepy. You know, like actual real life people.

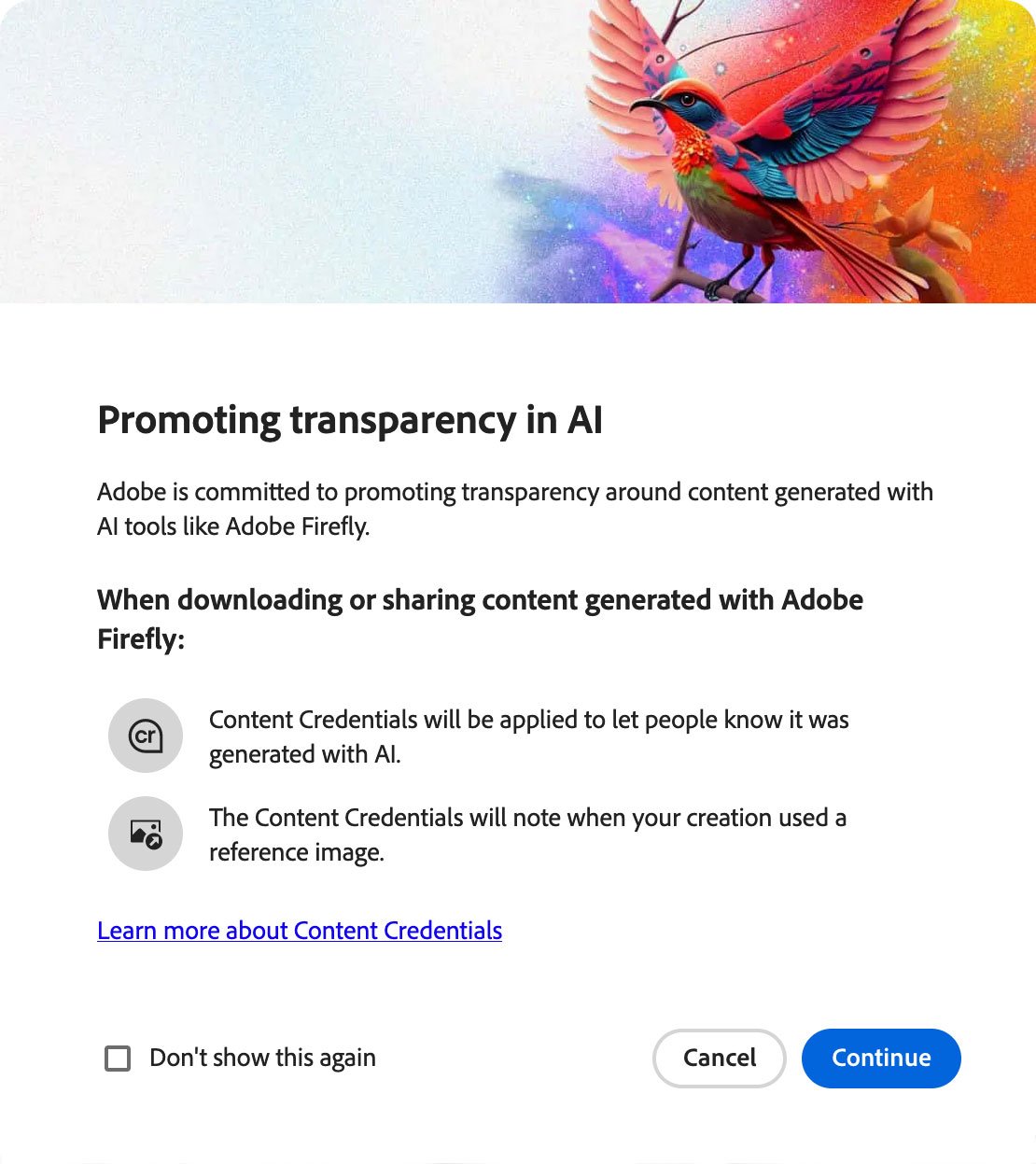

Again, I'm really compacting a lot here to keep things from getting bogged down with technical jargon, but these are real concerns that have had companies like Adobe make serious public efforts to proactively promote responsible guidelines for generative a.i. learning and sharing, as well as protecting intellectual property and reflecting diversity in a positive way. For the most part, this corporate responsibility and good faith approach has been necessary, not just because of the reactions from creatives worldwide, but because of the accountability these types of organizations can be held to legally. Remember, theft of any kind is generally frowned upon.

So now we get to the Max conference where the undeniable star was Adobe's generative text-to-image a.i. program, Firefly. All over the conference floor, in its classrooms and displays, and promoted heavily at each keynote session were strikingly beautiful images all created by artificial intelligence. What Firefly offered was so prominent that it sometimes felt invasive. Is it cool and will it save designers from doing tedious things like masking, editing, and rough concepts that require super quick turnaround? Oh man, you bet your a$$ it will. But when one classroom speaker jokingly noted at the beginning of his session that it was the only conference event not promoting any new artificial intelligence tools, he was met with a roaring standing ovation.

Adobe competently showed that its a.i. deep learning methods were trained solely on its own library of photographs, illustrations, images, and graphics (pretty much its entire stock library) and that its engineers were working tirelessly to integrate guardrails so that diversity and inclusion were represented equally and respectfully. So there you go! Problem solved. You can stop worrying now about everything. Robots are kind, love is love, intellectual property theft is a thing of the past, and the system works great.

Even if all that were true, there's still a hiccup or two. Now put your personal feelings about a.i. aside for just a bit and let's pull that pin out regarding my optimistic outlook from earlier. The cold hard fact is that a.i. isn't going anywhere, and just by comparing where things stood a year ago with where they are today, it's pretty obvious that what a.i. can create is only going to get more impressive, and it's going to be up to everyone to ensure it's guided properly, safely, and responsibly. Up until the Max conference, I could be heard saying, "Man, if this is where it's at now, imagine what it'll be like in ten years!" But that was already outdated thinking when I first wrote about a.i., especially when Adobe has said it expects the number of user generated a.i. images, which is already over 15 billion, to increase fivefold in the next 3 years!

So while ethically we have a lot of work to do, I think it's also fair to say that we're currently pretty early on in this saga and we're already experiencing some pretty heavy a.i. fatigue, and to that point, I'd like to redirect your attention to stock images. I love stock images, like I adore stock images and I've written about them before (and it's a funny article I'm really proud of too), but because I almost exclusively use Adobe Stock, and because Adobe Stock is the epicenter of Adobe a.i.'s learning process, it's kind of saturating the store with its own product and nothing else. Imagine going to your local grocery store, and all they sold was their brand of corn flakes. No produce, no deli, no butcher; just aisle after aisle of varying sized boxes of store brand corn flakes and nothing else. Because Adobe's generative a.i. has come along so far and has gotten so good, it offers generative a.i. images as stock image options. Originally you'd see one or two pop up, then it became the majority of what was offered. Now depending on what you're looking for, generative a.i. images can be all that's available.

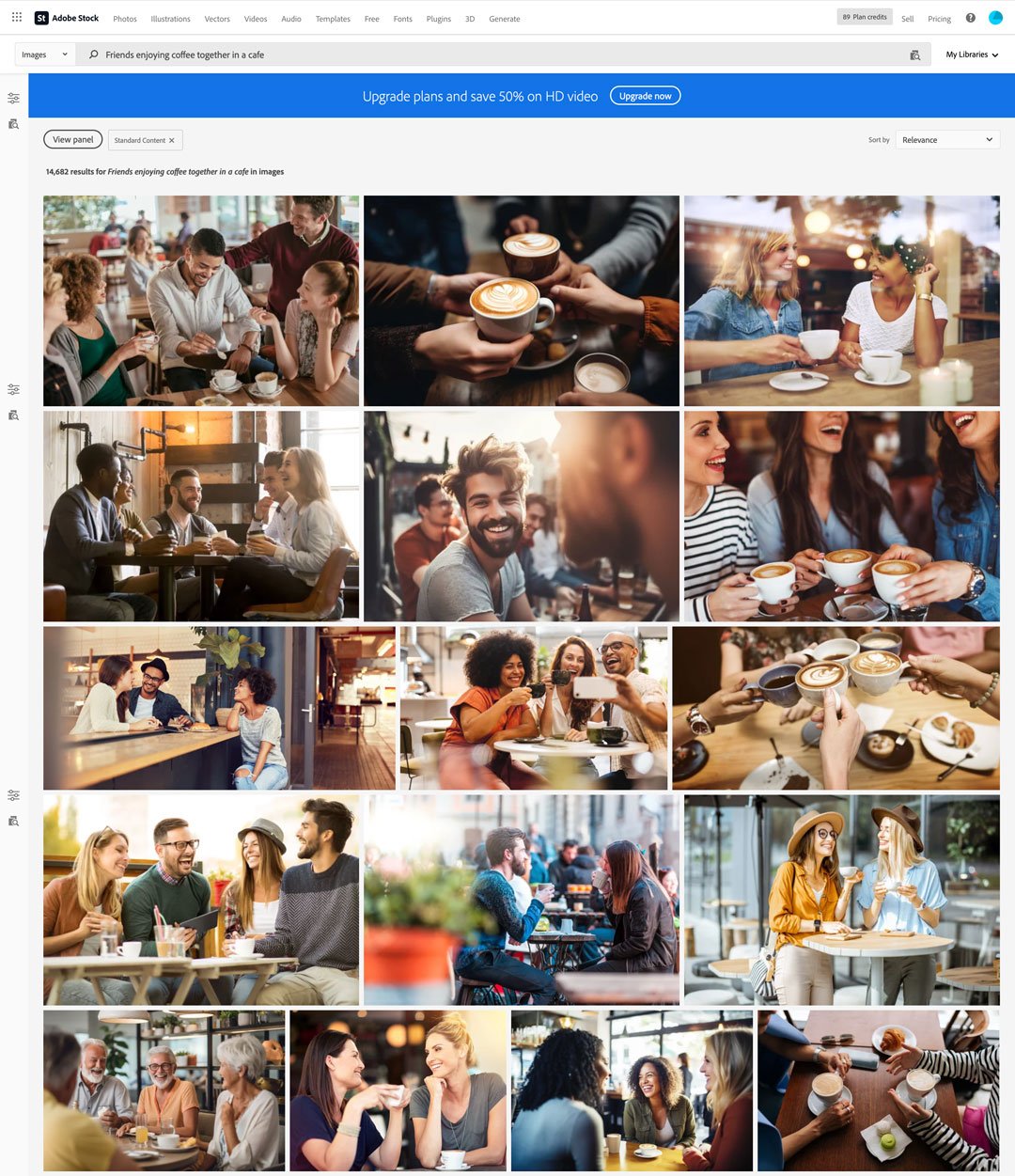

Real quick, if you haven't read my post on stock image sites: they provide a designer access to photos, graphics, templates, or illustrations the designer wouldn't otherwise have time to create themselves. Creating an ad for a new coffee chain? I can search for something like, "Friends enjoying coffee together in a cafe" on a stock image site, just like I might look for something in a search engine, and I will get various results that will hopefully match the look and vibe I'm going for.

So just to clarify, I don't have an issue with a.i. generated images. The quality is really good and getting better. There will be times when an a.i. generated image is much better than anything else the stock image site is offering, but it's frustrating how much of it there is. When searching for "Friends enjoying coffee together in a cafe", the language in the search itself is referring to a very human experience. The generative a.i. image is good, but it's not perfect. Plus I think there's a subconscious bias that I personally have that (at least in this instance) using something that was not created by humans but that is supposed to be representative of a human experience does not feel like a genuine, intentional choice to encourage others to buy into the design I'm making.

This image was generated by a.i. Can you tell?

I realize there's so much to unpack there. I'm using a computer to design this resource, so is it really that bad that I'm requesting a computer create an additional element for it? Will future designers be less likely to have such a bias if they grow up understanding how ubiquitous this technology is? How "human" does an image have to be to properly reflect a human experience? We're all already so familiar with shorthand cues like seeing people in such settings (photographed, illustrated, or otherwise) that I wonder whether creating that established connection is even necessary.

For their part, Adobe has a very clear and upfront policy on their ethical standards and practices for posting, hosting, sharing, and creating generative a.i. images. Now people will point out that a lot of that responsibility falls heavily on the users respecting that system, and this is what I personally believe is at the heart of all of this and creates the endless loop of debate surrounding it. Policing how people play with this technology may be noble, but it's also antithetical to creativity in general. I'll say it again:

Artificial intelligence is not going anywhere and will continue to develop, but a.i. is a mirror reflecting back on the people who use it, interact with it, and engage others with it. Just like the world we live in, what we put in will be synonymous with what it gives back.

A.I. images generated from DALL-E 2 and Firefly using the prompt, “Renaissance painting of a black cat in a pink tu tu”

But what do you think? Is a.i. the cool new future that will bring the world peace and prosperity? Is this the beginning of the end? Will a.i. steal jobs from creative people as well as blue and white collar workers? Or am I just fixated on the loving memory of our cat Destiny and want a.i. to realize how special she was like my wife and I do?

Our actual (late) cat Destiny killing it better than any artist—a.i. generated or otherwise—ever could. Rest in Peace, sweet girl.

Thanks so much for stopping by, and I really would love to hear your thoughts on this. Please follow me on Instagram and let me know! Also like last time, here are more sources as well as some other great articles about a.i.: